26/02/2013 A look at OpenGL and Direct3D performance with Unigine


Is OpenGL faster than Direct3D?

Last summer, Valve published some quite stunning news: Left 4 Dead 2 runs almost 20% faster on Linux than on Windows. This news was quickly interpreted as "OpenGL is faster than DirectX".

I really can't believe this claim, and I don't believe the day this happens is near. Direct3D mainly has to support an ecosystem made of games, which is a very restricted context. Furthermore, we can expect these programmers to be relatively good, or at least better than the average OpenGL programmer, who sometimes uses OpenGL to display something without any care for how efficient the code is, because rendering is not the purpose of the program.

Supporting these inefficient programs efficiently results in slower OpenGL implementations, which can't just focus on making fast code run faster. After all, optimization is about making slow code run faster, and these optimizations end up making the "fast path" slower. If we really want better OpenGL drivers, what we need is programmers who write better OpenGL programs. This is one way to explain why the OpenGL core profile was created, but considering the low adoption of the core profile, I guess the OpenGL ecosystem doesn't care much about writing fast OpenGL code. Moreover, the only real core profile implementation is Apple's OpenGL 3.2 implementation; the others are just the compatibility profile with extra error checking. How could we expect better performance? The chicken and the egg...

The case of Left 4 Dead 2 is interesting because it was a major title, but this game is built on an old engine designed for Direct3D 9. Chances are that IHVs are more interested in optimizing their Direct3D 11 implementations, and Direct3D 11 also better reflects how today's hardware works. The release of Unigine Heaven 4 and Unigine Valley 1 is another opportunity to evaluate the performance difference between OpenGL and Direct3D, especially considering that the engine is designed for Direct3D 11 hardware and supports Direct3D 9 and OpenGL 4. We can still argue that Unigine uses OpenGL 4.0, which lacks a significant part of OpenGL 4.3, making it an unfair comparison, but this is the best we currently have.

Comparing OpenGL 4.0 with Direct3D 9 and Direct3D 11 implementations with Unigine

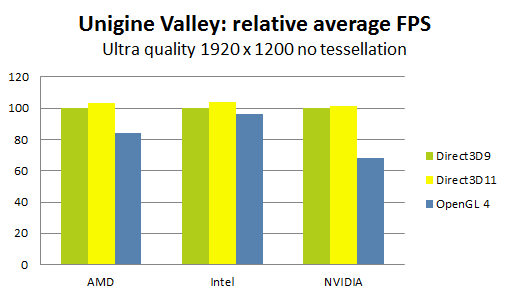

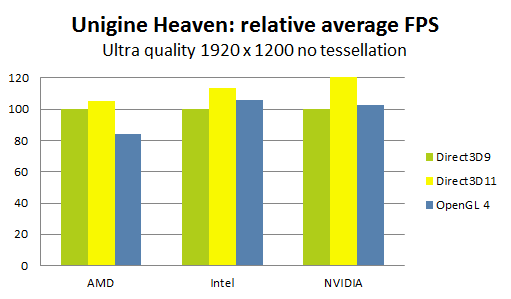

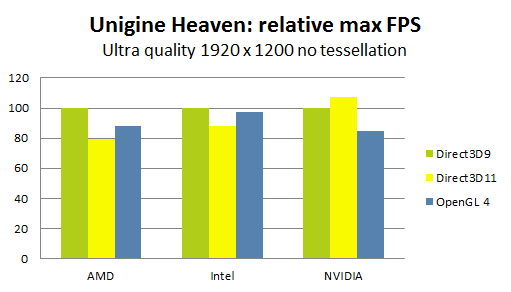

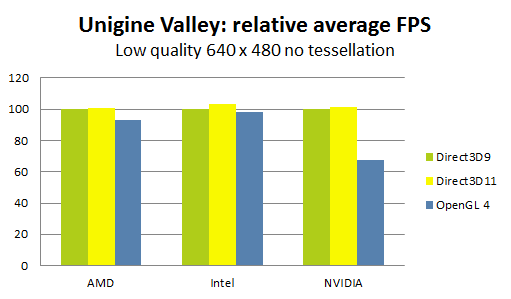

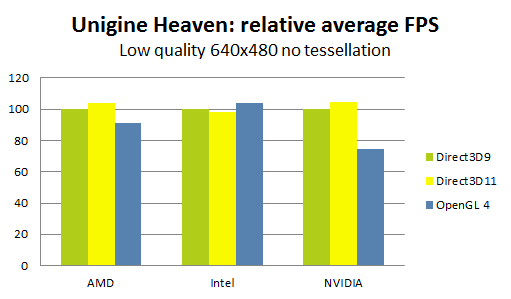

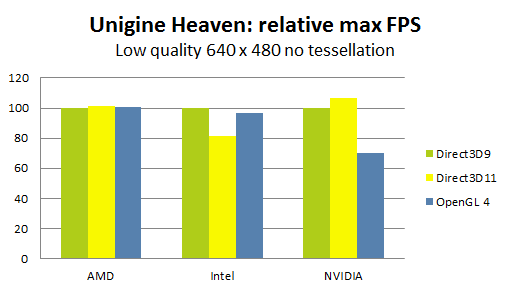

In these charts we don't care about the absolute level of performance; we only consider the relative level of performance, arbitrarily normalized to the results of the Direct3D 9 version of the Unigine engine.
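To make the normalization concrete, here is a minimal C++ sketch, assuming each renderer is summarized by one average FPS value. The function name `relative_to_baseline` and the numbers in the usage note are hypothetical, not Unigine results.

```cpp
#include <cassert>
#include <map>
#include <string>

// Divide each renderer's average FPS by the baseline renderer's average FPS
// (Direct3D 9 in these charts), so that the baseline scores exactly 1.0.
std::map<std::string, double> relative_to_baseline(
    const std::map<std::string, double>& fps_by_renderer,
    const std::string& baseline)
{
    const auto it = fps_by_renderer.find(baseline);
    assert(it != fps_by_renderer.end() && it->second > 0.0);
    const double base_fps = it->second;

    std::map<std::string, double> relative;
    for (const auto& entry : fps_by_renderer)
        relative[entry.first] = entry.second / base_fps;
    return relative;
}
```

With made-up averages of 40, 50 and 44 FPS for Direct3D 9, Direct3D 11 and OpenGL, the chart values would be 1.0, 1.25 and 1.1.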

Generally speaking, the Direct3D 11 version is faster for every vendor, especially on the Heaven benchmark. Considering that Heaven has been around for a while, this difference might only be due to IHV driver optimizations. The OpenGL drivers seem to be particularly optimized for Heaven in the Intel and NVIDIA implementations. On Heaven, the OpenGL renderer performs better than the Direct3D 9 renderer, but not on Valley. I personally expect that no vendor will care much about optimizing the Direct3D 9 renderer of these benchmarks.

The AMD driver implementation behaves very similarly on Valley and Heaven. Sadly, the OpenGL driver falls behind the Direct3D 11 implementation by 19.4%.

The NVIDIA OpenGL implementation performs quite badly on Valley, being about 33.7% slower than the Direct3D 11 implementation. If we assume that the performance drop we see on Valley, which is not present on Heaven, is due to NVIDIA driver optimizations for Heaven, it means that the implementation can reach this level of performance; however, for most of us, NVIDIA won't care about our programs' performance, and we will be stuck with very bad performance.
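The "X% slower" figures compare two scores of the same benchmark. Assuming they are measured relative to the Direct3D score (the post does not say which reference is used), the arithmetic is the following; `percent_slower` is a hypothetical helper.

```cpp
#include <cassert>

// Percentage by which `fps` is slower than `reference_fps`, measured
// relative to the reference: 100 * (reference - fps) / reference.
// Assumption: the reference is the Direct3D score.
double percent_slower(double fps, double reference_fps)
{
    assert(reference_fps > 0.0);
    return 100.0 * (reference_fps - fps) / reference_fps;
}
```

For example, 40 FPS against a 50 FPS reference is 20% slower, while the reverse ratio reads as 25% faster, so the choice of reference matters when comparing figures such as 19.4% and 33.7%.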

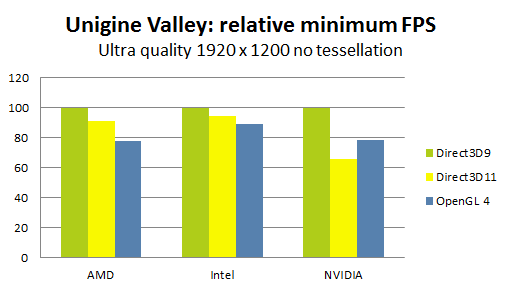

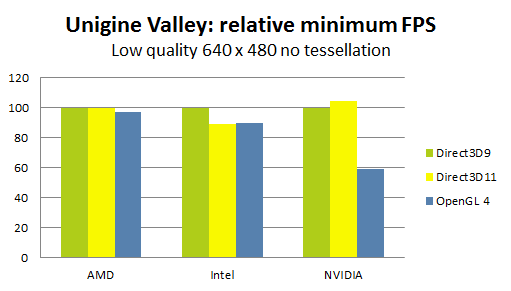

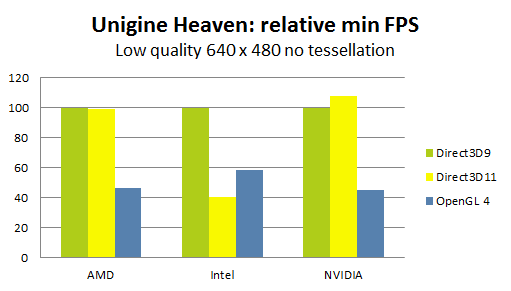

The minimum FPS is probably the most important number. If this number is too low, the user experience is jeopardized.
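A minimum FPS is typically derived from the slowest frame rather than from an average. Here is a minimal sketch, assuming per-frame durations in milliseconds are available; how Unigine computes its own minimum is not documented in this post, and some tools smooth over a short window of frames instead.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Minimum FPS = 1000 / longest frame time in milliseconds.
// A single slow frame, such as a streaming hitch, is enough to pull it down.
double min_fps(const std::vector<double>& frame_times_ms)
{
    assert(!frame_times_ms.empty());
    const double worst_ms =
        *std::max_element(frame_times_ms.begin(), frame_times_ms.end());
    assert(worst_ms > 0.0);
    return 1000.0 / worst_ms;
}
```

Two 10 ms frames followed by one 50 ms frame give a minimum of 20 FPS, even though the average frame time is only about 23 ms.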

Here, the shiny Direct3D 11 performance fades a little, as the minimum framerate is lower on Direct3D 11 than on Direct3D 9. On NVIDIA, it seems that there is a specific performance bug in the Direct3D 11 drivers.

Sadly, the OpenGL implementations are again not performing well, especially on Valley, where the AMD and NVIDIA OpenGL drivers are 22% slower than the Direct3D 9 drivers.

The Intel implementation seems to be the most balanced here.

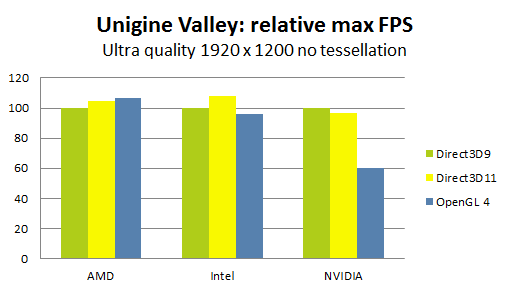

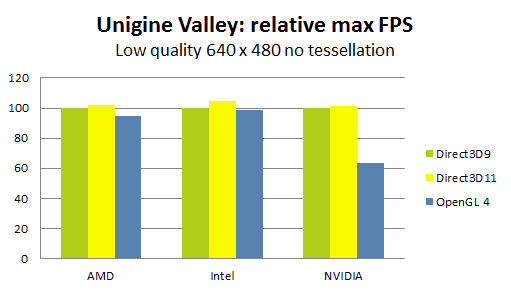

The maximum FPS doesn't matter much, except that it's better when it is as close as possible to the average FPS. A huge difference is just wasted performance that biases the average FPS, making it look better without delivering the user experience that should come with it.
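One way very fast frames can flatter an average: if a tool averages instantaneous FPS values instead of dividing the frame count by the elapsed time, a few short frames inflate the result. The sketch below compares both definitions; which one Unigine actually uses is left open, and both function names are hypothetical.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Frames rendered divided by total elapsed time: what the user actually gets.
double fps_from_total_time(const std::vector<double>& frame_times_ms)
{
    assert(!frame_times_ms.empty());
    const double total_ms =
        std::accumulate(frame_times_ms.begin(), frame_times_ms.end(), 0.0);
    return 1000.0 * frame_times_ms.size() / total_ms;
}

// Arithmetic mean of per-frame FPS: a very short frame (high instantaneous
// FPS) weighs as much as a long one, which biases the result upward.
double mean_of_instant_fps(const std::vector<double>& frame_times_ms)
{
    assert(!frame_times_ms.empty());
    double sum = 0.0;
    for (double t : frame_times_ms)
        sum += 1000.0 / t;
    return sum / frame_times_ms.size();
}
```

With frame times of 20, 20, 20 and 4 ms, the first function returns 62.5 FPS and the second 100 FPS: one very fast frame is enough to make the second number look much better than the experience.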

Unigine at low resolution and quality, more CPU limited

Now we run the tests at low quality and a small resolution, hoping that the CPU becomes the bottleneck, to see how each implementation behaves.

Looking at these results, it seems that the level of performance of the Intel drivers is very stable across APIs. It is possible that the HD4000 is simply too slow for these benchmarks, so that they remain GPU bound whatever the API. Or maybe the drivers are very optimized, but I have doubts about that. Speaking of HD4000 driver optimizations, picture the dream: a driver written for an Intel HD4000 can assume that the CPU will be an Ivy Bridge, which means that the driver can use AVX intrinsics or be compiled with AVX optimizations enabled. ♥_♥
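As a sketch of what "compiling for a known CPU" could mean, here is a hypothetical driver helper, `add_arrays`, that adds two float arrays eight at a time with 256-bit AVX intrinsics when the compiler targets AVX (for example with `-mavx`) and falls back to scalar code otherwise. Ivy Bridge supports AVX but not AVX2, which only arrived with Haswell, so the sketch sticks to AVX.

```cpp
#include <cassert>
#include <cstddef>
#if defined(__AVX__)
#include <immintrin.h>
#endif

// Hypothetical driver helper: out[i] = a[i] + b[i].
// When built for a CPU known to support AVX, 8 floats are processed per step.
void add_arrays(const float* a, const float* b, float* out, std::size_t n)
{
    assert(n == 0 || (a && b && out));
    std::size_t i = 0;
#if defined(__AVX__)
    for (; i + 8 <= n; i += 8) {
        const __m256 va = _mm256_loadu_ps(a + i);
        const __m256 vb = _mm256_loadu_ps(b + i);
        _mm256_storeu_ps(out + i, _mm256_add_ps(va, vb));
    }
#endif
    // Scalar tail, or the whole loop when AVX is not enabled at compile time.
    for (; i < n; ++i)
        out[i] = a[i] + b[i];
}
```

The design choice is a compile-time switch rather than runtime CPU detection: a driver that only ever ships alongside one CPU family could skip the detection entirely.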

The most striking result is that the NVIDIA OpenGL implementation turns out to be particularly slow, but this is not really a surprise. The NVIDIA OpenGL implementation is about 25% and 32% slower than the Direct3D 9 implementation, and 30% and 33% slower than the Direct3D 11 implementation, on Valley and Heaven respectively. The NVIDIA implementation is supposed to be the most robust, which means that it is also probably the one with the most workarounds and checks to cope with all sorts of strange behaviours. It is very likely that the NVIDIA implementation does the most work behind our back, which affects our nicely crafted code.
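To picture this "work behind our back", here is a toy model, not NVIDIA's code: a hypothetical defensive driver re-validates all state on every draw because the application might have changed anything, while a driver that trusts a dirty flag only re-validates after the state actually changed. The `validations` counter stands in for CPU time; every name is an assumption for illustration.

```cpp
#include <cassert>

// Toy model of driver-side validation cost; all names are hypothetical.
struct ToyDriver {
    bool track_dirty_state;   // false: defensive driver, validates every draw
    bool state_dirty = true;  // set whenever the application changes state
    int validations = 0;      // stands in for CPU time spent checking

    explicit ToyDriver(bool track) : track_dirty_state(track) {}

    void set_state() { state_dirty = true; }

    void draw()
    {
        if (!track_dirty_state || state_dirty) {
            ++validations;    // check bindings, formats, program, ...
            state_dirty = false;
        }
        // ... submit the draw ...
    }
};
```

For 100 draws after a single state change, the defensive driver validates 100 times and the dirty-flag driver once; the CPU cost of the first grows with the draw count even when the application is well behaved.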

The AMD OpenGL implementation is about 7% and 9% slower than the Direct3D 9 implementation, and 8% and 13% slower than the Direct3D 11 implementation, on Valley and Heaven respectively.

For all IHVs, Direct3D 9 or Direct3D 11 makes very little difference: when it comes to CPU usage, the different API designs don't seem to matter much, or maybe their designs are simply too similar.

Here Intel performs best, but actually all vendors perform very badly. We see that keeping a stable framerate with OpenGL is really hard. A possible explanation is the OpenGL memory model, which prevents OpenGL programmers and applications from controlling memory transactions. With OpenGL, we only provide "hints", hoping that the drivers will interpret them appropriately and perform the transfers when it's wisest to do them. Good luck with that! My first request for OpenGL remains Direct State Access, 4 years after the extension was released, but my second is a new memory model where the application, which is the only one that knows what to do with the memory, is able to manage the memory itself (allocation, transfer, access). My hypothesis for this performance issue might simply be wrong, but what is close to certain is that this issue concerns every vendor, so this is an OpenGL design issue, not a vendor-specific issue.
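As an illustration of the application-managed memory model wished for here, this is a minimal sketch of a streaming ring allocator: the application creates one large buffer up front, sub-allocates regions for each frame itself, and reuses a region only once the GPU has finished the frame that used it. The GPU side is simulated by calls to `retire_up_to` (real code would wait on a fence); `StreamingRing` and its members are hypothetical, not an OpenGL API.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Hypothetical application-side ring allocator over one large buffer.
// It hands out offsets; the application decides when to write data there.
class StreamingRing {
public:
    explicit StreamingRing(std::size_t capacity) : capacity_(capacity) {}

    // Reserve `size` contiguous bytes for `frame` and return the byte offset,
    // or -1 if that would overwrite data a not-yet-completed frame may read.
    // Frames must be passed in non-decreasing order.
    long long allocate(std::size_t size, unsigned frame)
    {
        assert(size > 0 && size <= capacity_);
        std::size_t offset = head_;
        std::size_t padding = 0;
        if (offset + size > capacity_) {  // no room before the end: wrap
            padding = capacity_ - offset;
            offset = 0;
        }
        if (used_ + padding + size > capacity_)
            return -1;                    // caller must wait for the GPU
        used_ += padding + size;
        head_ = (offset + size) % capacity_;
        in_flight_.push_back({frame, padding + size});
        return static_cast<long long>(offset);
    }

    // Release every region used by frames up to `completed_frame`.
    void retire_up_to(unsigned completed_frame)
    {
        while (!in_flight_.empty() && in_flight_.front().frame <= completed_frame) {
            used_ -= in_flight_.front().bytes;
            in_flight_.pop_front();
        }
    }

    std::size_t used() const { return used_; }

private:
    struct Region { unsigned frame; std::size_t bytes; };
    std::size_t capacity_;
    std::size_t head_ = 0;
    std::size_t used_ = 0;
    std::deque<Region> in_flight_;
};
```

With a 100-byte ring, two 40-byte allocations for frames 0 and 1 fit; a third one fails until frame 0 is retired, then wraps to offset 0. The point of the design is that the application, not a driver heuristic, decides when a transfer may happen and when it must wait.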

Speaking of vendor-specific issues, Intel seems to have a performance bug in its Direct3D 11 drivers.

Conclusion on performance

Building a conclusion without access to the code is quite challenging, so I really don't want any of this post to be seen as truth but rather as lines of thought. There are some different behaviours across vendors which make me believe that Heaven is particularly optimized by the hardware vendors but Valley is not. It is also possible that other factors I haven't identified are involved in these differences. For example, I can imagine that all the assets in Heaven can be stored in GPU memory, so that the benchmark doesn't need any data streaming during execution. In Valley, the terrain is quite large and its geometry is rendered using a CPU-based CLOD method, which leads me to believe that there is a significant amount of data streaming.
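To illustrate why CPU-side terrain LOD implies streaming, here is a simplified, discrete stand-in for the per-patch decision such a scheme makes every frame: the detail level of a terrain patch follows the camera distance, so when the camera moves, patches change level and new geometry has to be produced and uploaded. The doubling rule and `select_lod` are hypothetical; Unigine's actual CLOD algorithm is not described in this post.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical LOD rule: level 0 (full detail) up to `base_distance`,
// then one level coarser each time the distance doubles, capped at max_level.
int select_lod(double distance, double base_distance, int max_level)
{
    assert(base_distance > 0.0 && max_level >= 0);
    if (distance <= base_distance)
        return 0;
    const int level =
        static_cast<int>(std::ceil(std::log2(distance / base_distance)));
    return level < max_level ? level : max_level;
}
```

When the camera moves from 150 to 300 units away from a patch (with a base distance of 100), its level changes from 1 to 2, so that patch's geometry has to be regenerated and sent to the GPU; over a large terrain, that is the kind of continuous traffic suspected here.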

Hence, either because the OpenGL implementations are generally slow or because streaming assets is slower with OpenGL, rendering with OpenGL is significantly slower than rendering with Direct3D 11, even with nicely crafted code like I expect Unigine's to be. However, it appears that the Intel OpenGL implementation is the one performing best, or should I say the least badly.

When reducing resolution and quality, we see that the NVIDIA OpenGL implementation seems to be the one that suffers the most from CPU overhead. NVIDIA has the reputation of providing the most robust implementation, which I guess implies that they do more work on the CPU side to make sure that applications are not using OpenGL the "funny" way.

Intel comes as a good surprise when it comes to performance. Obviously, the overall performance is not great because we are speaking of an integrated chip here, but the performance difference between the Direct3D 11 and OpenGL implementations is roughly 10% for Intel, against about 20% for AMD and 30% for NVIDIA.